The Risk Competency Illusion

How Artificial Intelligence Could Transform Risk Expertise from Theater to Science

Our capacity to survive and thrive as a species has undergone a remarkable transformation over the past century. Life expectancy has doubled, workplace fatalities have plummeted, and countless diseases that once decimated populations have been virtually eradicated. Yet this progress stems not from rapid evolutionary adaptation to the modern risk landscape, but from the systematic suspension of individual choice through public health mandates, safety regulations, and technological guardrails that often override our instinctive preferences.

The same species that initially resisted seat belt laws and smoking bans now lives twice as long, protected by a web of policies and technological systems that quietly circumvent our limited risk intuitions.

This presents us with two compelling paradoxes. First, many of our greatest survival advances have come not through natural selection of enhanced survival instincts, but by creating systems that bypass them entirely. Second, and perhaps more troubling, while we've acknowledged our cognitive limitations in some domains, we continue to venerate professional "risk expertise" across numerous high-stakes fields, where the actual predictive value of human judgment remains startlingly poor.

Medicine and meteorology offer compelling examples of how risk assessment can evolve beyond intuition and anecdote. Both fields have transformed from relying on clinical judgment and folk wisdom to achieving remarkable predictive accuracy through the development of robust scientific foundations. Their success stems from a rich ecosystem of peer-reviewed research, accredited academic programs, and professional organizations that maintain rigorous standards. A meteorologist's precise hurricane landfall prediction or an algorithm's early detection of disease patterns represents genuine progress in risk assessment, achieved not through management consulting sleight-of-hand, but through the patient building of scientific infrastructure, standardized methodologies, and technological tools that augment human judgment.

This stands in stark contrast to what we might call "risk intelligence theater": a phenomenon prevalent across risk-centric professions such as security, intelligence, and even venture capital, where elaborate frameworks and sophisticated-looking matrices often mask fundamental methodological weaknesses. These fields have largely adopted the trappings of management consulting (erudite language, fanciful graphics, and impressive-sounding frameworks) while offering the same predictive value as ancient Roman augurs reading the flight of birds.

Geopolitical risk intelligence traffics in erudite storytelling that would make CNBC's market pundits proud, offering narratives about sociopolitical events and business threats with the same confidence as Squawk Box hosts explaining yesterday's stock movements. As with their financial media counterparts, study after study reveals that their forecasting accuracy rarely exceeds that of informed amateurs or simple algorithms. These experts face no accountability for their predictions and rarely submit their analysis to post-mortem validation, yet they continue to command premium fees for their authoritative-sounding prognostications.
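To make "post-mortem validation" concrete, here is a minimal sketch of one standard way to score probabilistic forecasts after the fact, the Brier score (the mean squared error between stated probabilities and what actually happened; lower is better). The forecasts and outcomes below are invented purely for illustration and do not come from any real track record.

```python
def brier_score(forecasts, outcomes):
    """Mean squared error between forecast probabilities and 0/1 outcomes."""
    if len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must have the same length")
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


# Hypothetical data: five yes/no events, 1 = the event happened.
outcomes = [1, 0, 0, 1, 0]

# A confident pundit who predicts drama every time (hypothetical numbers).
pundit = [0.9, 0.8, 0.7, 0.6, 0.9]

# A "simple algorithm": always forecast the historical base rate (assumed 40%).
base_rate = [0.4] * len(outcomes)

print(f"pundit:    {brier_score(pundit, outcomes):.3f}")
print(f"base rate: {brier_score(base_rate, outcomes):.3f}")
```

In this toy example the always-confident forecaster scores worse than the flat base rate. A forecaster who never keeps this kind of ledger cannot show that they would do any better.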

Corporate risk management epitomizes this theater with its pseudo-quantitative risk matrices—arbitrary scales and ratings that have been proven to mislead rather than inform decision-making. This is particularly pernicious because most stakeholders lack the statistical literacy and domain expertise to distinguish between legitimate risk intelligence and eloquent nonsense. Instead, we default to social proof, measuring expertise through market success or institutional adoption rather than actual predictive accuracy.
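One way such matrices can mislead is rank reversal: a risk with the lower expected loss can receive the higher matrix score. The sketch below assumes a typical 5×5 likelihood-times-impact matrix. The bin edges and the two example risks are hypothetical, chosen only to show the effect, and expected loss is used as a simple yardstick rather than as the only valid measure of risk.

```python
from bisect import bisect_right

# Hypothetical bin edges for a 5x5 matrix (ratings 1-5 on each axis).
LIKELIHOOD_EDGES = [0.01, 0.10, 0.30, 0.60]              # annual probability
IMPACT_EDGES = [10_000, 100_000, 1_000_000, 10_000_000]  # loss in dollars


def rating(value, edges):
    """Map a raw value to an ordinal rating from 1 to len(edges) + 1."""
    return bisect_right(edges, value) + 1


def matrix_score(probability, loss):
    """Common 'likelihood x impact' score used in risk matrices."""
    return rating(probability, LIKELIHOOD_EDGES) * rating(loss, IMPACT_EDGES)


def expected_loss(probability, loss):
    return probability * loss


# Two invented risks: (annual probability, loss if it occurs).
risk_a = (0.55, 900_000)
risk_b = (0.09, 9_000_000)

for name, (p, loss) in {"A": risk_a, "B": risk_b}.items():
    print(name, "matrix score:", matrix_score(p, loss),
          "expected loss:", round(expected_loss(p, loss)))
```

Under these assumed bins, risk A gets the higher matrix score even though risk B carries the larger expected loss, so a team prioritizing by score would address them in the wrong order.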

This challenge has become particularly acute in our current era, when trust in the institutions that enabled the 20th-century survival revolution is rapidly eroding. In today's social media landscape, legitimate researchers and subject matter experts must compete for attention with YouTube influencers, TikTok personalities, and LinkedIn ‘thought leaders.’ The resulting information anarchy leaves the public adrift, struggling to distinguish between evidence-based analysis and compelling storytelling. When it comes to understanding and navigating risk, this crisis of epistemic authority has potentially lethal consequences.

This recognition led me to develop the concept of safe-esteem, along with a multidisciplinary framework for understanding and improving personal risk judgment and decision-making capabilities.

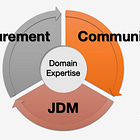

Through a startup of the same name, we're focused on merging critical Risk Judgment and Decision-making (RJDM) competency domains—measurement, communication, and cognition—while exploring how Artificial Intelligence could drive a transformation as profound as the shift from folk medicine to evidence-based healthcare, but at the individual level.

Risk judgment is fundamentally a forecasting exercise, and ML/AI systems are inherently designed for prediction—making them powerful tools to enhance human decision-making and improve risk assessment.

The current discourse around AI focuses heavily on potential catastrophic risks, often displaying the same cognitive biases and methodological flaws we've critiqued in professional risk analysis. This obscures a compelling alternative perspective: AI represents humanity's most promising tool for evolving our personal and collective survival operating system.

AI offers us superhuman abilities to process vast amounts of data, tap into deep and diverse knowledge domains, tailor messaging to individuals, identify and reduce cognitive biases, and act as a heuristics optimization engine (data and statistics are not always the best basis for decision-making), all at near-real-time speeds.

Through training and coaching, surveys and interviews, industry events, and even social media posts, I occasionally prod pundits and senior decision-makers to pull back the curtain on various professional domains and questionable high-profile risk conversations, exposing how their methodologies and pronouncements often reinforce rather than resolve our cognitive biases and flawed decision-making. While the critique might seem harsh, it serves a constructive purpose: elevating our safe-esteem and pointing toward genuine advances in how we assess and understand risk in the age of AI.

Here are some of the posts in this series:

How AI Can Elevate Risk Judgment and Decision-Making

AI is already measurably advancing natural hazard forecasting, medical diagnostics, and drug discovery and development—domains representing humanity's oldest survival challenges. Yet in high-income nations, where poverty and access are less problematic, most casualties stem not from 'acts of God' but from preventable causes: poor risk awareness, innumer…

AI Risks Fallacies Deep Dive #1

On the #AIXrisks series: Artificial Intelligence (AI) risks have quickly replaced COVID-19 as the deeply misguided yet widely embraced Risk Judgment and Decision-Making (RJDM) domain. As such, it presents a unique opportunity to shed light on the inadequacies of our risk discourse. Safe-esteem will not become an AI-focused publication, but we will addre…

Very interesting read. It’s a wake-up call on how we trick ourselves into feeling “in control” of risk and competence. Sometimes we’re so sure we’re right that we skip the reality checks. Best takeaway: stay humble, ask more questions, and keep testing until you’re certain—not just confident.